Address your AI risk

Contact us for an AI audit to strengthen the security of your models against potential AI risks and weaknesses.

USE CASE 1

AI Privacy & Security

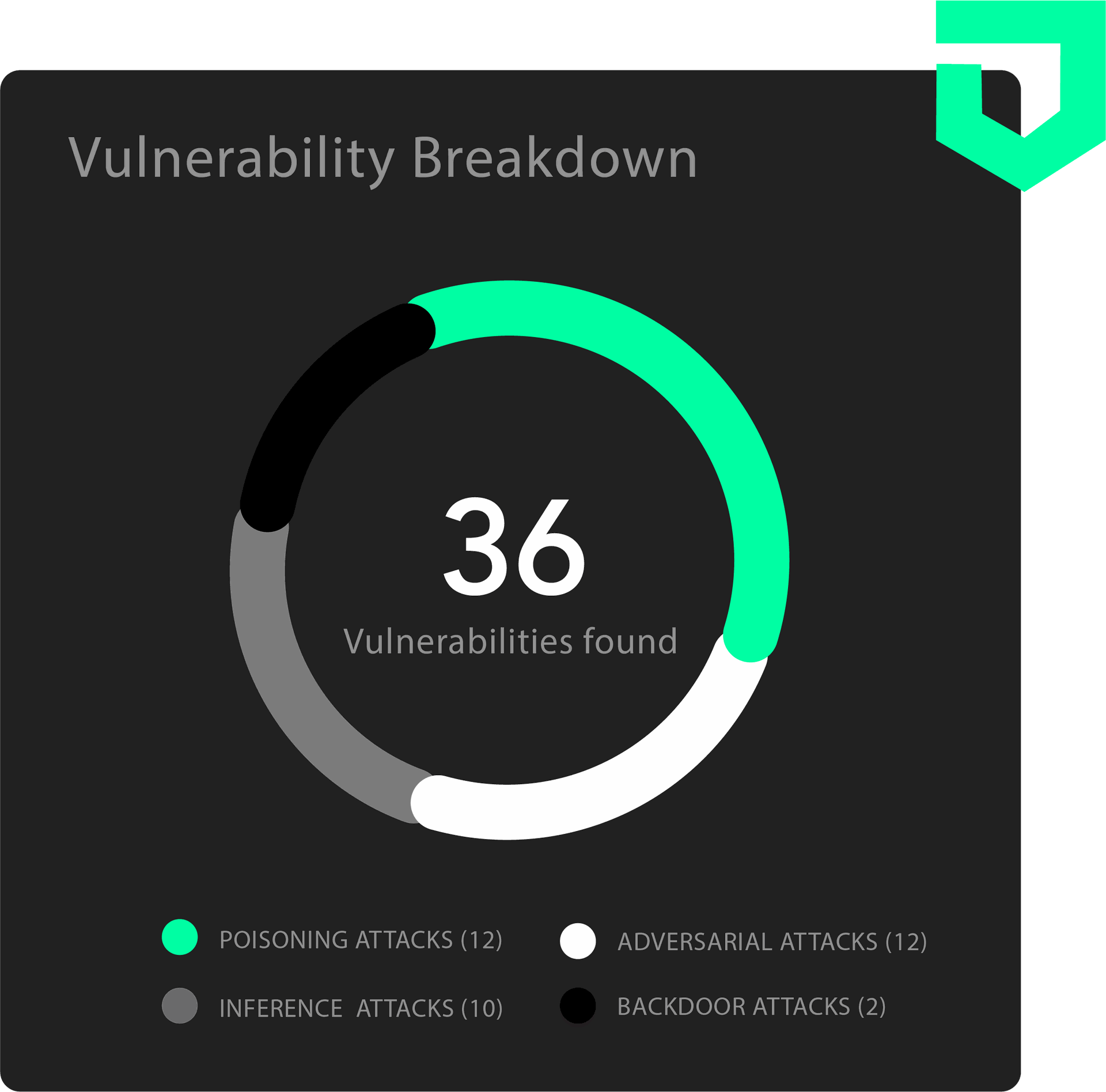

Simply put, AI model security and privacy encompass the protection of artificial intelligence models, and the sensitive data they handle, from unauthorized access, manipulation, or disclosure. Threats typically range from data poisoning and data breaches to adversarial, inference, and backdoor attacks.

From development to production, the security of AI systems must be a priority: a single vulnerability can lead to inaccurate or faulty predictions.

Strengthen your models against these threats with our machine unlearning methods and our Differential Privacy (DP), Zero-Knowledge Machine Learning (ZKML), and Fully Homomorphic Encryption for Machine Learning (FHEML) approaches.
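As a rough illustration of what Differential Privacy means in practice, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is not Defaince's implementation; the helper names `laplace_noise` and `private_count` are hypothetical, and the example assumes a simple count whose sensitivity is 1.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1: adding or removing one record changes
    the true count by at most 1, so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    ages = [23, 35, 41, 52, 67, 29, 44]  # illustrative data only
    noisy = private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng)
    print(f"noisy count of people over 40: {noisy:.2f}")
```

Smaller values of `epsilon` add more noise and give stronger privacy; any single release is noisy, but the noise averages out to zero, so aggregate statistics stay useful.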

USE CASE 2

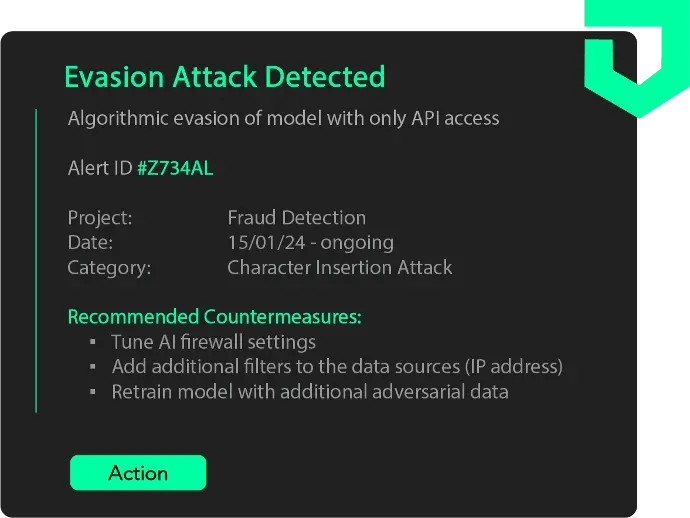

AI Model Robustness

AI model robustness is the ability of an artificial intelligence model to maintain its performance, accuracy, and soundness when it encounters unforeseen or adversarial inputs and conditions, such as adversarial examples, model poisoning, and evasion or extraction attacks. A robust AI model should generalize well to new data and resist such attacks and perturbations without significant degradation in performance.

Using the world's largest AI Security Arsenal, we provide an all-encompassing suite of generative adversarial defense methods and enhancements that bolster the resilience of your models against AI-related risks.
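To make "adversarial input" concrete, the sketch below applies the Fast Gradient Sign Method (FGSM), a well-known attack, to a toy logistic-regression model. It shows an attack rather than any of Defaince's defenses; the weights, input, and `eps` budget are made-up illustrative values, and the function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move each feature by eps in the direction
    that increases the binary cross-entropy loss.

    For logistic regression, d(loss)/dx = (p - y) * w with p = predict_proba(w, b, x).
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    w, b = [2.0, -1.0], 0.0  # toy model weights (illustrative only)
    x, y = [0.5, 0.2], 1     # clean input, correctly classified as class 1
    x_adv = fgsm(w, b, x, y, eps=0.3)
    print("clean p(y=1):", round(predict_proba(w, b, x), 3))
    print("adversarial p(y=1):", round(predict_proba(w, b, x_adv), 3))
```

Here a perturbation of at most 0.3 per feature moves the prediction from p ≈ 0.69 to p ≈ 0.475, flipping the predicted class. A robust model is one whose output does not change this easily under such small, bounded perturbations.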

USE CASE 3

AI Model Scaling

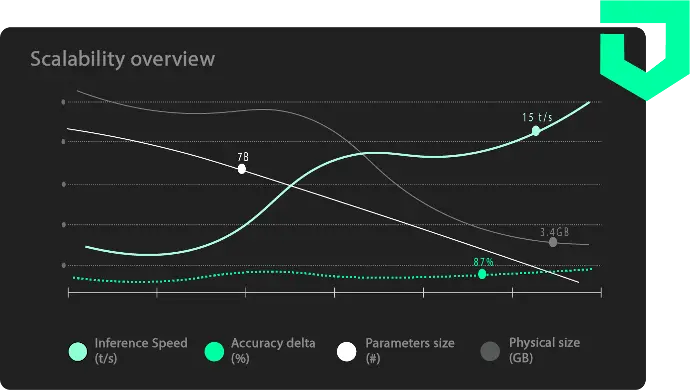

Technically speaking, model scaling involves enhancing the capacity and performance of AI models to handle larger datasets and more complex computations, which is a crucial step for improving the accuracy and efficiency of AI applications in real-world scenarios.

While modern neural networks are, relatively speaking, the most scalable form of machine learning, state-of-the-art LLMs and image synthesis models still incur heavy server costs at inference time. With Defaince's automatic distillation API, we shrink your model down to consumer-friendly sizes and cut inference costs without forcing a full or partial retraining of your architecture.
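Defaince's distillation API is not documented on this page, so the following is only a sketch of the generic knowledge-distillation objective (Hinton et al., 2015) that a smaller "student" model is commonly trained with to mimic a larger "teacher". The names `distillation_loss`, `temperature`, and `alpha`, and their defaults, are illustrative assumptions, not parameters of Defaince's API.

```python
import math


def softmax(logits, temperature: float = 1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label: int,
                      temperature: float = 4.0, alpha: float = 0.5) -> float:
    """Knowledge-distillation loss for a single example.

    Combines KL(teacher || student) on temperature-softened distributions,
    scaled by T^2, with ordinary cross-entropy on the hard label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft = (temperature ** 2) * kl
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # illustrative teacher logits
    student = [1.5, 0.8, 0.3]  # illustrative student logits
    print("distillation loss:", distillation_loss(student, teacher, label=0))
```

The T^2 factor keeps the gradient contribution of the softened targets roughly comparable as the temperature changes; `alpha` trades off matching the teacher against fitting the true labels.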

USE CASE 4

AI Governance & Compliance

AI governance and compliance encompass the creation of frameworks to ensure the lawful, responsible, and ethical development of AI. This involves establishing policies for data management, promoting transparency, fostering accountability, and mitigating risks throughout the entire AI lifecycle.

Threats to governance and compliance usually arise from data breaches, biased decision-making, and model drift over time. Addressing them requires embracing ethical principles, implementing robust policies, conducting regular audits, engaging with stakeholders, and continuously refining practices based on feedback and emerging industry best practices.

Mitigate your AI risk
